ANNEX¶

A - WSDL definition¶

(informative)

General WSDL definition¶

In WSDL the normative part shall apply only to method names and input and output message type definition: URLs path and internal variable names definition may vary for any implementation.

Snapshot Pull SOAP WSDL definition¶

The machine readable file for this specification can be downloaded as SnapshotPull.wsdl

Snapshot Push SOAP WSDL definition¶

The machine readable file for this specification can be downloaded as SnapshotPush.wsdl

Simple Push SOAP WSDL definition¶

The machine readable file for this specification can be downloaded as SimplePush.wsdl

Stateful Push SOAP WSDL definition¶

The machine readable file for this specification can be downloaded as StatefulPush.wsdl

Simple CIS SOAP WSDL definition¶

The machine readable file for this specification can be downloaded as SimpleCIS.wsdl

This WSDL implements both Service Requester and Service Provider WSDL interface, which can be implemented separately on each agent interface.

Stateful CIS WSDL definition¶

The machine readable file for this specification can be downloaded as StatefulCIS.wsdl as StatefulCIS.wsdl

This WSDL implements both Service Requester and Service Provider WSDL interface, which can be implemented separately on each agent interface.

Full Exchange WSDL definition¶

Despite all services are implemented in each FEP+EP defined pattern, it’s possible to combine Push, Pull Services as well as CIS Service Request and Feedback services in the same webserver for a node acting both as a client and as provider. For this purpose a “FULL Exchange” WSDL interface definition is provided as a merging of all methods implemented in the various FEP+EP pattern. It is intended for management of exchange information FEP + EP and implementation of their features, the specification which are defined at each single FEP+EP pattern applies.

The machine readable file for the extended union of all FEP+EP implementation described in this document can be downloaded as Full Exchange WSDL

C - “Snapshot Pull with simple http server” profile definition¶

(normative)

Overall presentation¶

This profile is compliant to Snapshot Pull FEP+EP PIM, it is derived from DATEX II version 2.0 Exchange Specification and it states all requirements to implement Snapshot Pull by simple http server profile, i.e. implemented by pure http/get, within the following clauses which implement all PIM specified features.

Snapshot Pull FEP+EP PIM defines the “pull message” received to be a “MessageContainer” according to Basic Exchange Data Model defined in Exchange 2020 PIM Annex C, which is implemented in XSD schema defined in this document Annex B.

Despite this rule, which always applies to Snapshot Pull implemented by WSDL, some implementation of “Snapshot Pull with simple http server” may consider to implement as delivery only the “PayloadPublication” without wrapping it in a “MessageContainer”, this is assumed implicititely defined by subscription agreement among client and suppliers.

This clause uses the term “Message” to refer either a “Message Container” or a single “Payload Publication”.

Describing payload and interfaces¶

The profile can handle different information to be delivered (such as different payloads), named information products by assigning a specific URL (potentially plus access credentials) per information product.

The information product itself is denoted by all but one path segments in the URL, while the ‘filename’ (i.e. the middle path segment) is “content.xml” per definition. This convention was introduced to allow the definition of related meta-data for this information product in other files in the same directory.

The end of this clause may sound awkward. It strives at maintaining all the regulations of the URI RFC, thus not constraining URLs for information products, but incorporating the need to have the final path element (the ‘filename’) being “content.xml” by convention.

To support authentication, the servers has to provide the credentials required to perform authentication for any particular information product.

Server requiring authentication MUST provide the required access credentials for BASIC authentication (i.e. user name & password) together with the URL for the Information Product.

Metadata for link management¶

Scenarios exist where the “If-Modified-Since” mechanism is not preferred to avoid redundant downloads. This will happen if the server provides information products by updating files on a standard, file-based www server (like Apache or Microsoft Internet Information Servers). In this option, the server would have two possible update regimes:

Periodical update of the information product’s payload file independent from any changes in the data.

Update the information product’s payload file on demand, when changes relevant to this product have occurred in the server’s database.

With updating regime 1, the file based www server would cyclically send the file content to the clients, as he will derive the last-modified value from the file modification times. So the client would receive redundant downloads!

Implementation based upon standard www servers and files as information products SHOULD update information product payload files only when their traffic domain content has changed.

This means that the file may stay unmodified for some time after an update. This regime has one serious drawback: the Client will not be able to determine whether the file remains unchanged because the (road) traffic situation is stable or because the backend server system itself (not the WWW server) is not operating properly. In fact, the response of the (intermediate) WWW system does not have the quality of an end-to-end acknowledgement.

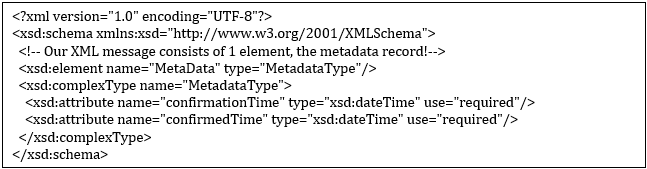

This information is given in a small XML document that is periodically updated, even if the (potentially huge) content file is unchanged. The file is required to refer to an XML Schema that contains an Element called MetaData as root element with two required attributes of type xsd:dateTime called confirmationTime and confirmedTime, with confirmationTime giving the time the acknowledgement was created and confirmedTime giving a value equal to the value the Last-Modified header field would have if the payload file (i.e. content.xml) had been requested.

The following XML Schema gives a valid example:

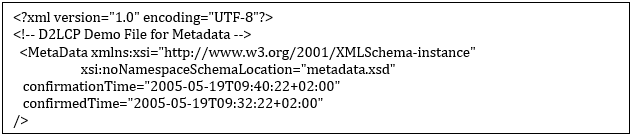

The acknowledgement file shall be put in the information product’s directory, besides the content.xml file containing the payload, with a filename of metadata.xml. A sample file for the schema above would be:

This leads to the following specifications:

Implementation based upon standard www servers and files as information products SHOULD provide a cyclically updated acknowledgement, accessible as:

D2LCP_infop_ack = “http:” “//” host [ “:” port ] infop_path “/metadata.xml” [“?” query]

If an acknowledgement is provided, it SHALL be a well-formed XML document with a XML Schema reference. The acknowledgement SHALL validate successfully against the referenced XML Schema.

The XML Schema referenced by an acknowledgement SHALL contain a root element called “MetaData”. This element SHALL contain two attributes, one named “confirmationTime” and one named “confirmedTime”, both of type “xsd:dateTime”.

If used, acknowledgements shall be updated cyclically, with best effort update cycle, but not less than once every three minutes.

An acknowledgement update SHALL indicate that the data server is operating properly at the time it is generated and that the content of that payload file – as last updated with modification time givenin the “confirmedTime” attribute of the “MetaData” – element iscurrently still valid.

The “confirmationTime” attribute of the “MetaData” element SHALL contain the current time when an acknowledgement is updated.

The “confirmedTime” attribute of the “MetaData” element SHALL contain the same value that a HTTP RESPONSE to an authorised HTTP REQUEST issued at the time of writing the acknowledgement would contain in the “Last-Modified” header field.

A server that is based upon standard WWW servers and files as information products SHALL indicate in the subscription negotiation process whether the acknowledgement option is supported.

Clients SHOULD use the acknowledgement option – if provided – to determine whether payload download is required.

Use of HTTP¶

This profile exchange uses HTTP Request (GET or POST) / Response dialogs to convey payload and status data from the Supplier to the Client. Note though that this profile supports POST requests only for interoperability with the Web services profile based on SOAP over HTTP exchange Information potentially included in the body of an HTTP POST request, is not processed.

This section stipulates how to use HTTP options for the Suppliers and the Clients of this profile.

Basic request / response pattern¶

Suppliers and Clients SHALL use the HTTP/1.1 protocol. Clients and Suppliers shall fully comply with the HTTP/1.1 protocol specification in RFC 2616, as of June 1999.

This protocol is based upon a request / response pattern, where the request can take one of several possible forms, among them the GET and POST methods for retrieving data. The GET and POST differ essentially in how the parameters of a request can be conveyed to the Supplier. While these parameters are conveyed as part of the URL in the HTTP GET request, the POST request allows specifying an “entity” (i.e. a message body) that contains these parameters, thus enabling less restricted parameters. POST requests were originally intended for server upload functionality.

As the specification foresees no complex request parameters, HTTP GET requests are preferred. Since other exchange systems sometimes require HTTP POST requests, they are also accepted. Nevertheless, it is not the intention to establish another exchange protocol layer on top of HTTP, and thus systems are not obliged to process the content of the body of an HTTP POST request.

Note

Systems MAY decide not to process the body of HTTP POST requests!

Clients SHALL use the HTTP GET or POST method of the HTTP REQUEST message to request data from the Supplier

The HTTP GET or POST request is served by the Supplier by generating an HTTP response message. The “Message” conveyed in this response is passed in the entity-body.

Note

It should be noted thought that for interoperability reasons (in particular with Web Services profile Clients that require a SOAP wrapper around the XML payload) the profile does not stipulate the “Payload Publication” or the “Message Container” to be the root element of the XML content.

Suppliers SHALL use an HTTP RESPONSE message to respond to requests. If applicable, one “Message” is contained in the entity-body.

Suppliers SHALL NOT respond to HTTP REQUEST messages using the GET or POST methods by responding with 405 (Method Not Allowed) or 501 (Not Implemented) return codes.

All communication is initiated by the client. Any data flow from supplier to client can only happen as the Supplier’s response to a Client’s request. When requesting data, the Client is not able to know whether the data he would receive would be exactly the same as the one he had received in response to his last request. This would lead to a serious amount of redundant network traffic, with potential undesired impact on communication charges and supplier/client workload. HTTP supports avoiding this by letting the Client specify the modification time of the last received update of a resource in an HTTP header field (If-Modified-Since). If no newer data is available, the response message will consist only of a header without an entity, stating that no new data is available. Clients are therefore recommended to set this header field in case they already hold reasonably recent information from the accessed URL.

Suppliers shall set the ‘Last-Modified’ header field in HTTP RESPONSE messages that provide payload data (response code 200) to the value that the information product behind the URL was last updated.

Clients may set the ‘If-Modified-Since’ header field in all HTTP REQUEST messages if they already hold a consistent set of data from a particular URL in their database and the last modification time of that data is known from the ‘Last-Modified’ header field of the HTTP header of the HTTP RESPONSE message within which the payload data was received.

It shall be understood that the semantics of the timestamps used within the If-Modified-Since header field are calculated in the server. Therefore, times generated by the Client according to his own system clock may not be used here but must be filled using the content of the Last-Modified header field of the most recently received HTTP RESPONSE message. If Clients connect to a resource for the first time or want to resynchronise, they simply don’t set this header field.

When setting the ‘If-Modified-Since’ header field, the Client SHALL copy the value of the ‘Last-Modified’ header field received within the last successful HTTP RESPONSE containing a message (response code 200) into this field.

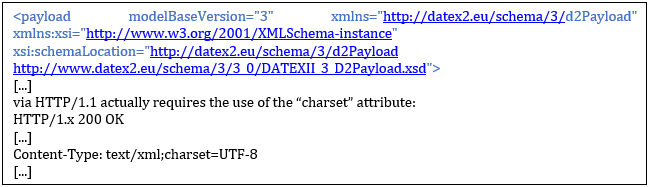

Suppliers SHOULD provide XML coded DATEX II payload as “text/xml” media type. Suppliers SHOULD state the used character set via the “charset” parameter; Suppliers SHOULD use the UTF-8 character set, i.e. the “Content-Type” response-header field SHOULD state “text/xml; charset=utf-8”.

Character sets, media types, etc. are a vast area that is notoriously underestimated as a source of potential interoperability problems. This clause aims at recommending the most widely used set of options, namely the use of the UTF-8 character set for XML payload. The “text/xml” is preferred to “application/xml” following a recommendation in RFC 3023 (“If an XML document – that is, the unprocessed, source XML document – is readable by casual users, text/xml is preferable to application/xml.”). Although in principle the profile is solely devoted to B2B communication, readability of the exchange payload has often proved to be beneficial for testing and educational purposes.

It should be noted that omitting the “charset” attribute in HTTP/1.1 for “text/*” type content implies the use of “ISO-8859-1” which is different from the UTF family (UTF-8, UTF-16) that are the minimum requirement for XML processors, and can thus be seen as the de-facto standard for XML. Consequently, sending typical XML payload like this:

If “text/xml” without parameter would be sent, HTTP/1.1 would be violated.

Note

Quote from RFC 2616: “When no explicit charset parameter is provided by the sender, media subtypes of the “text” type are defined to have a default charset value of “ISO-8859-1” when received via HTTP. Data in character sets other than “ISO-8859-1” or its subsets MUST be labelled with an appropriate charset value.”

Clients MUST accept “identity” content-coding; Clients SHOULD (and if they do, prefer to) accept “gzip” content-coding; Clients MAY accept other “content-coding” values registered by the Internet Assigned Numbers Authority (IANA) in their content-coding registry as long as they also accept “identity” and “gzip” content-coding.

When including an “Accept-Encoding” request-header field in an HTTP REQUEST message, the Client MUST NOT exclude acceptance of “identity” content-coding.

Suppliers MUST provide “identity” content-coding of the payload; Suppliers SHOULD provide “gzip” content-coding of the payload; Suppliers MAY provide other “content-coding” values registered by the Internet Assigned Numbers Authority (IANA) in their content-coding registry as long as they also provide “identity” and “gzip” content-coding.

This set of clauses essentially ensures that a confused situation where the Supplier is not able to provide payload in a content-coding that the client understands can not exist, as all suppliers are enforced to support “identity” (which means unmodified text/xml content) content-coding, and clients are enforced to understand this content-coding.

Furthermore, these clauses include a policy that recommends the use of compression and ensures that compression is always interoperable because it requires all clients/suppliers that do support compression to support “gzip” at least as an option. This means that:

All client/supplier interaction will work at least with “identity”

Clients/Suppliers supporting compression will always be able to agree on “gzip”

Clients can request preferred other compression (“deflate” or “compress”), and Suppliers will respond accordingly if they support these content-codings.

Implementers should be aware that non-transparent web caches may perform media type transformations on behalf of their clients. Thus Client running over such a cache might notice that compressed response content is automatically decompressed. However this is only problematic if there is a low bandwidth connection between the client and the cache, such as a dial up access point. If a service has a significant number of such users then the addition of the no-transform directive to the Cache-Control header field of the generated responses should be considered. For more details on the use of the Cache-Control field, please consult Section 14.9 of RFC 2616.

Servers may use the ‘no-transform’ directive in the ‘Cache-Control’ header field to avoid non-transparent caches from sending non-compressed content.

Authentication¶

Authentication is supported, i.e. only users with explicit permission are allowed to download payload data. The required access credentials have to be provided to the Client as part of the outcome of the DATEX II subscription creation with the content Supplier, which is here an offline process. The specifications make use of the simplest and most widely spread authentication scheme for HTTP, i.e. BASIC authentication.

Note

This scheme is not seen as sufficiently strong for commercial strength business and safety relevant application, as the password is not encrypted during transmission. Applications that fit into this description will either have to use other exchange profiles or they will need to establish a sufficiently secure transport layer below DATEX II Exchange.

The Client receives a username and password together with a URL which identifies a specific publication from the server. During access, the Client then builds a single string from this (“<username>:<password>”) and encodes it according to base64 encoding rules. The result is put into the Authorization header field of the HTTP REQUEST message.

Clients SHOULD fill access credentials they MAY have received during the subscription negotiation process into the ‘Authorization’ header field of the HTTP REQUEST message.

Server providing access credentials (username & password) during the subscription negotiation phase MAY respond with response code 401 (Unauthorized) to HTTP REQUESTS that do not contain valid access credentials in the ‘Authorization’ header field

Additional rules¶

The regulations in the previous section are a clarification on how to use standard features of HTTP/1.1 according to RFC2616.

This sub-clause contains additional regulations that go beyond ‘pure’ HTTP. Anyway, the regulations presented so far have to be seen as clarifications on top of RFC2616. They are compliant with the standard and have to be used in conjunction with the standard itself. This principle holds especially for the handling of HTTP return codes. The following clause summarises the main return codes as used in connections and refers to the standard for the handling of further codes.

Servers SHALL produce and Clients SHALL process the following return codes:

200 (OK), in responses carrying payload,

304 (Not Modified), if no payload is sent because of the specification in the ‘If-Modified-Since’ header,

503 (Service Unavailable), if an active HTTP server is disconnected from the content feed,

404 (Not Found), if a file-based HTTP server does not have a proper payload document stored in the place associated to the URL.

Servers SHOULD produce and Clients SHOULD process the following return codes:

401 (Unauthorised), if authentication is required but not presented in the request, or if invalid authentication is presented in the request,

403 (Forbidden), if the requested operation is not successful for any other reason.

Servers & Client SHALL apply an RFC2616 compliant regime for producing / handling all other return code.